Non-human traffic makes up a larger share of website visits than most business owners realize, mainly because Google Analytics doesn't report on bots and crawlers by default. Here's how device detection can help you identify bots so you can serve them different payloads or block them from your website entirely.

Bot traffic, a problem faced by every website

Protecting your valuable content from bot traffic is a shared concern, whatever type of business you run. Bots take several forms, such as scrapers, spammers and various kinds of crawlers. The most dangerous are probably impersonators, which are built to imitate human behaviour and slip past security measures, including CAPTCHAs.

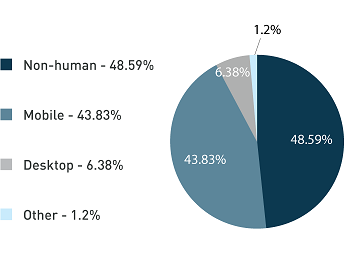

Bots are often used with malicious intent, for example in automated spamming campaigns, for spying on competitors or even for DDoS attacks. Such activities can damage any company's reputation, and identifying and blocking "bad" bots with various countermeasures can be costly for every business. According to our Mobile Intelligence Report for Q1 2016, non-human traffic can represent as much as 48% of all internet traffic. That is not a number to neglect.

How DeviceAtlas can help you detect bot traffic

The DeviceAtlas database not only detects mobile phones, tablets and other web-enabled devices; it also makes it possible to distinguish non-human traffic from legitimate client requests. Having all of this traffic intelligence in one place lets you decide how to handle each visitor according to device type.

There are many ways to use DeviceAtlas to mitigate non-human traffic. In this article we'll focus on two server-side integrations, HAProxy and NGINX, both of which DeviceAtlas supports.

HAProxy

HAProxy, the well-known high-performance load balancer, commonly sits between the front end and the back end to distribute load, which makes it an ideal place to control incoming traffic. An HAProxy instance can make decisions based on the nature of the traffic, and this is where DeviceAtlas intelligence comes in. Since release 1.6, HAProxy can work with DeviceAtlas through a sample fetch or a converter.

These fetches and converters expose DeviceAtlas properties that can be used in any scenario. The goal in this example is to create an HAProxy rule (ACL) that detects bots and crawlers and either blocks them entirely or redirects them to a different server, e.g. a slower one or one on which certain features are disabled. We need two components to achieve this:

- The HAProxy software itself, available here.

- The DeviceAtlas Enterprise C API, available here together with a free, limited database.

To set up the DeviceAtlas module for HAProxy, follow the instructions provided at the link above.

Once that is done, the sample configuration below addresses our use case using the DeviceAtlas sample fetch and six device properties (since HAProxy 1.7, up to 12 properties can be used). If any of these properties returns true, the request is not coming from a desirable client. Each boolean comes back as 1 or 0, and the default separator between DeviceAtlas property values is the pipe character, so the result will look something like this:

```
0|0|1|0|0|0
```

We then use a simple regular expression to check whether a 1 appears anywhere in the result.

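```
global
    ...
    # Path to the DeviceAtlas JSON data file
    deviceatlas-json-file /home/dcarlier/sample.json
    ...

defaults
    ...

frontend checktraffic
    bind *:8080
    # True when a 1 appears anywhere in the pipe-separated property values
    acl isNonHumanTraffic da-csv-fetch(isRobot,isChecker,isDownloader,isFilter,isSpam,isFeedReader) -m reg .*1.*
    # Only traffic not flagged as non-human is sent to the users backend.
    # Bots match no use_backend rule, so unless a default_backend is set
    # (the defaults section is elided above), HAProxy answers them with a 503.
    use_backend users if !isNonHumanTraffic

backend users
    ...
```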
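To make the ACL logic concrete, here is a small Python sketch that mimics what the rule evaluates. It assumes, as described above, that the fetch returns the six properties as 1 or 0 joined by the pipe separator; the function names are hypothetical, and nothing here calls HAProxy or DeviceAtlas.

```python
import re

# Property order used in the da-csv-fetch call of the sample configuration.
PROPERTIES = ["isRobot", "isChecker", "isDownloader",
              "isFilter", "isSpam", "isFeedReader"]

# Same pattern as the ACL; re.search scans the whole string (unanchored).
BOT_PATTERN = re.compile(r".*1.*")


def is_non_human_regex(csv_result: str) -> bool:
    """Mimic the ACL: True if a '1' appears anywhere in the fetched string."""
    return BOT_PATTERN.search(csv_result) is not None


def flagged_properties(csv_result: str, sep: str = "|") -> list[str]:
    """Split the result and report which properties were set to 1."""
    values = csv_result.split(sep)
    return [name for name, value in zip(PROPERTIES, values) if value == "1"]


if __name__ == "__main__":
    sample = "0|0|1|0|0|0"
    print(is_non_human_regex(sample))         # True
    print(flagged_properties(sample))         # ['isDownloader']
    print(is_non_human_regex("0|0|0|0|0|0"))  # False
```

Because the regex match is unanchored, the pattern `.*1.*` behaves the same as `1` on its own; splitting the string, as `flagged_properties` does, is only needed when you want to know which property triggered the rule.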

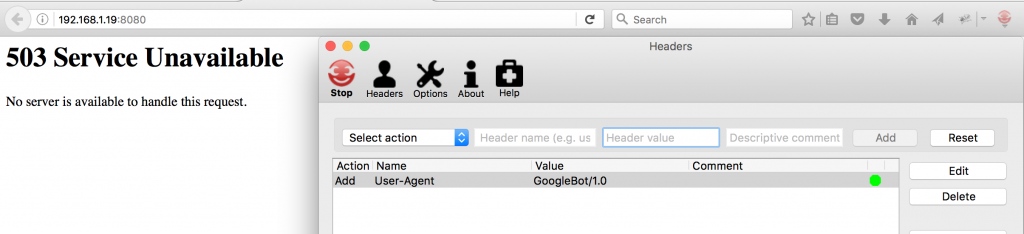

Here is a screenshot showing the result of spoofing the User-Agent to mimic a bot:

To learn more about configuring the DeviceAtlas module for HAProxy, check out this excellent online documentation on GitHub.

NGINX

Alternatively, you can check the nature of incoming traffic at the back end. NGINX, the well-known lightweight and fast web server, can be used with the DeviceAtlas Enterprise C++ API module. This makes all DeviceAtlas properties for a given client request available as NGINX variables, which can then drive redirection decisions within NGINX.

You can build the complete DeviceAtlas C++ module solution either from NGINX together with the Lua module and the NGINX Development Kit (ngx_devel_kit), or with the OpenResty bundle.

In this example we'll focus on the NGINX Lua module, which lets you write programmatic rules inside NGINX. Combined with DeviceAtlas intelligence, it becomes a very powerful way to control traffic.

Lua code can act on this information because it can read NGINX variables through `ngx.var`. The simple use case below computes a Lua boolean from six properties, replies with a plain-text message to bots, and, for genuine traffic, sets a cookie recording the hardware type before redirecting to /user/.

```nginx
http {
    include      mime.types;
    default_type application/octet-stream;

    # Larger variables hash for the many da_* variables the DeviceAtlas module adds
    variables_hash_max_size 2048;

    # Path to the DeviceAtlas JSON data file
    devatlas_db /home/dcarlier/sample.json;

    sendfile          on;
    keepalive_timeout 65;

    server {
        listen      8980;
        server_name localhost;

        location / {
            default_type 'text/plain';

            content_by_lua_block {
                -- Non-human if any of the six bot-related properties is "1"
                local isNonHumanTraffic = (
                    ngx.var.da_isRobot == "1" or
                    ngx.var.da_isChecker == "1" or
                    ngx.var.da_isDownloader == "1" or
                    ngx.var.da_isFilter == "1" or
                    ngx.var.da_isSpam == "1" or
                    ngx.var.da_isFeedReader == "1"
                )

                if isNonHumanTraffic then
                    ngx.say("You are a bot !!")
                else
                    -- Genuine visitor: record the hardware type in a one-hour cookie
                    local cookieVal = 'USERTYPE='
                    if ngx.var.da_primaryHardwareType then
                        cookieVal = cookieVal .. ngx.var.da_primaryHardwareType
                    else
                        cookieVal = cookieVal .. 'generic'
                    end
                    cookieVal = cookieVal .. ';expires='
                    cookieVal = cookieVal .. ngx.cookie_time(ngx.time() + 3600)
                    cookieVal = cookieVal .. ';path=/'
                    ngx.header['Set-Cookie'] = cookieVal

                    -- Assumes /user/ is served by another location (elided below);
                    -- otherwise this redirect would come back to this block and loop.
                    return ngx.redirect("/user/")
                end
            }
        }

        ...
    }
}
```
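To experiment with this decision logic outside NGINX, here is a rough Python equivalent of the Lua block. It is a sketch under stated assumptions, not part of the DeviceAtlas module: the properties arrive as a plain dictionary of strings standing in for the `da_*` NGINX variables, and `handle_request` and `cookie_time` are hypothetical helper names, the latter approximating the date format that `ngx.cookie_time` produces.

```python
import time
from typing import Mapping, Optional, Tuple

# The six properties checked in the Lua block (da_<name> in NGINX).
BOT_PROPERTIES = ("isRobot", "isChecker", "isDownloader",
                  "isFilter", "isSpam", "isFeedReader")


def cookie_time(ts: float) -> str:
    """Approximate ngx.cookie_time(), e.g. 'Thu, 18-Nov-10 11:27:35 GMT'."""
    return time.strftime("%a, %d-%b-%y %H:%M:%S GMT", time.gmtime(ts))


def handle_request(props: Mapping[str, str],
                   now: Optional[float] = None) -> Tuple[str, str]:
    """Return ('bot', body) or ('redirect', Set-Cookie value), like the Lua block."""
    if any(props.get(name) == "1" for name in BOT_PROPERTIES):
        return "bot", "You are a bot !!"

    now = time.time() if now is None else now
    hardware = props.get("primaryHardwareType")
    # Lua treats an empty string as true, so only a missing (nil) value
    # falls back to 'generic'; mirror that rather than using `or`.
    if hardware is None:
        hardware = "generic"
    cookie = f"USERTYPE={hardware};expires={cookie_time(now + 3600)};path=/"
    return "redirect", cookie


if __name__ == "__main__":
    print(handle_request({"isRobot": "1"}))
    # ('bot', 'You are a bot !!')
    print(handle_request({"primaryHardwareType": "Tablet"}, now=0))
    # ('redirect', 'USERTYPE=Tablet;expires=Thu, 01-Jan-70 01:00:00 GMT;path=/')
```

Passing `now` explicitly keeps the cookie expiry deterministic, which makes the logic easy to check before deploying the Lua version.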

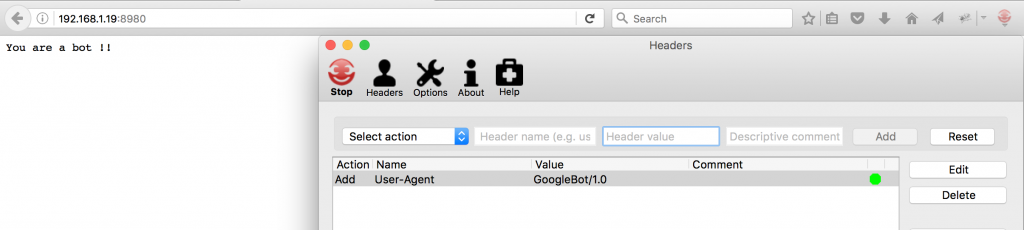

Again, here is the result of mimicking a bot:

Of course, the Lua module is flexible enough to support much more complex scenarios. The Lua module Howto gives more details on what can be accomplished.

Conclusion

As we have seen, DeviceAtlas traffic intelligence can be very useful in protecting your site or service from undesirable visitors. Depending on your needs, you could do this at the load balancer layer or deeper within your infrastructure. Either way, DeviceAtlas intelligence becomes available within the load balancer or web server configuration, allowing for very powerful manipulation of traffic.

Fine-grained control over traffic at this level can be useful in many ways. The most obvious benefit is blocking certain non-human traffic to reduce costs. Alternatively, you can keep the same server capacity and let it respond faster thanks to the reduced load. Given that non-human traffic can account for nearly half of all web traffic, the improvements can be substantial.

Of course, some non-human visitors are beneficial, especially search engine bots, so bot traffic must be filtered with great caution. DeviceAtlas makes it possible to detect Google, Bing, Baidu, Yandex and other search engine bots, which should be served the same website as human visitors.
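The sample rules above flag every request with `isRobot` set, which would likely also catch search engine crawlers. One way to exempt them is sketched below in Python. The property name `isSearchEngineBot` is purely hypothetical and stands in for whichever DeviceAtlas property your data file provides to identify search engines, so check the DeviceAtlas property reference before relying on it.

```python
from typing import Mapping

# Bot-related properties checked in the HAProxy and NGINX examples.
BOT_PROPERTIES = ("isRobot", "isChecker", "isDownloader",
                  "isFilter", "isSpam", "isFeedReader")

# HYPOTHETICAL property name, used only for illustration.
SEARCH_ENGINE_PROPERTY = "isSearchEngineBot"


def should_block(props: Mapping[str, str]) -> bool:
    """Return True for flagged non-human traffic that is not a search engine."""
    # Let recognised search engine crawlers through before any bot check.
    if props.get(SEARCH_ENGINE_PROPERTY) == "1":
        return False
    return any(props.get(name) == "1" for name in BOT_PROPERTIES)


if __name__ == "__main__":
    print(should_block({"isRobot": "1"}))                               # True
    print(should_block({"isRobot": "1", SEARCH_ENGINE_PROPERTY: "1"}))  # False
```

Checking the exemption first keeps the rule order explicit: a crawler you want indexed is never blocked, whatever other bot flags it carries.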

Start detecting web crawlers and bots

Looking for the best way to get a detailed view of all non-human traffic to your site? Try the DeviceAtlas device detection solution with a free trial.

Check out how DeviceAtlas detects bots and crawlers.