This article was originally published on mobiForge.com.

Page weight is the gravity of the web - a relentless downwards drag, ever present and utterly unavoidable. Understanding it is a critical aspect of a successful web strategy.

This series of articles on page weight will address background, measurement and reduction. In this article, part I of the series, we'll talk about the importance of page weight.

Why page weight is important

There are many reasons why page weight is important but, broadly speaking, they fall into two categories: 1) user experience impact and 2) market reach. Let’s take a look at each in turn.

User experience

Load time

Load time is directly affected by overall page weight: all other things being equal, a heavy page will always take longer to load than a light one. A heavy page is always bad, but it is worth noting that excess weight tends to hurt most at exactly the times when you are relying most heavily on the web. No amount of CDN-hosted resources or client-side caching can avoid the fact that all of a page's assets need to be fetched at some point, and the user's local connectivity is almost always the main bottleneck.

Countless reports published over the years (1, 2, 3, 4, 5) agree on a central point: users are extremely sensitive to page load times, and even small changes in weight noticeably affect traffic. These reports describe increases in load time of fractions of a second resulting in higher bounce rates and lower revenues (according to Amazon, each 100ms increase in latency cost 1% in sales). Google's experiments in this area showed that user traffic took months to recover after they deliberately slowed page load times for certain users.

High-speed mobile networks are rolling out rapidly, but they are starting from a very low base. For most people 3G is the best case, and on 3G the average page now takes 8-40 seconds to load, even in perfect laboratory conditions. TCP's slow-start congestion control means that real-life load times can be significantly longer than raw throughput figures predict. Note also that 4G availability in a given region doesn't mean people actually have access to it: some operators charge more for 4G plans.
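To see why slow start matters, here is a deliberately simplified model of fetching a page over a single TCP connection. It assumes an initial congestion window of 10 segments of 1460 bytes (a common modern default), one round trip for the handshake, a window that doubles every round trip until it fills the link, and no packet loss or TLS; the function names and the 300ms round-trip time are illustrative assumptions, not measurements. As a sanity check on the 8-40 second range above, a 2MB page is 16 megabits, which takes 8 seconds at 2 Mbps and 40 seconds at 0.4 Mbps.

```python
def naive_load_seconds(page_bytes: float, bandwidth_bps: float) -> float:
    """Throughput-only estimate: page size divided by link speed."""
    return page_bytes * 8 / bandwidth_bps


def slow_start_load_seconds(
    page_bytes: float,
    bandwidth_bps: float,
    rtt_s: float,
    init_cwnd_segments: int = 10,  # assumed initial congestion window
    mss_bytes: int = 1460,         # assumed maximum segment size
) -> float:
    """Toy single-connection model of TCP slow start (no loss, no TLS)."""
    # Bytes that must be in flight to keep the link busy for one round trip.
    bdp_bytes = bandwidth_bps * rtt_s / 8
    cwnd_bytes = init_cwnd_segments * mss_bytes
    remaining = page_bytes
    elapsed = rtt_s  # one round trip for the TCP handshake
    while remaining > 0:
        # Each round trip delivers at most one congestion window,
        # and never more than the link can carry in that time.
        remaining -= min(cwnd_bytes, bdp_bytes)
        elapsed += rtt_s
        cwnd_bytes *= 2  # slow start doubles the window every round trip
    return elapsed


if __name__ == "__main__":
    page = 2_000_000  # roughly the average page size discussed in this article
    for bps in (2_000_000, 400_000):
        print(f"{bps / 1e6:.1f} Mbps: naive {naive_load_seconds(page, bps):.1f}s, "
              f"with slow start {slow_start_load_seconds(page, bps, 0.3):.1f}s")
```

Even this optimistic model adds time on top of the naive figure, and the gap widens as round-trip times grow or as a page is spread across many short connections, because every new connection starts slow start from scratch.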

It is true that careful caching of resources linked from pages means that repeat visitors won't have to fetch everything again but, to paraphrase an old saying, you never get a second chance to make a fast first impression.

Cost

A less often discussed aspect of page weight is cost to the user. Bandwidth is never truly free—even “unlimited” data plans typically have a point at which they are capped or throttled. Admittedly, in many scenarios cost is not a central issue: smartphones are sold with reasonable data plans in many countries and the average user rarely roams. But sometimes cost is absolutely a central issue, to the point that people simply don't visit heavy sites.

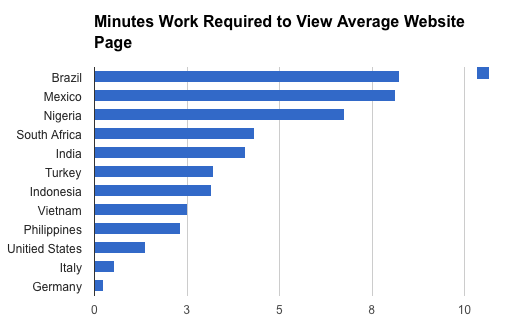

Extending the line of thinking of Time’s article about the light web, Why the Internet’s Next Billion Users Will Be Mobile-Only, the cost in terms of minutes worked of viewing an average-size web page in some select countries is the following:

Is your web page really worth 8 minutes of a person's time?

Expressed this way it's easy to understand why some parts of the web are effectively off-limits for entire countries of mobile browsers.
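The minutes-worked figure is straightforward arithmetic: the data cost of one page view divided by the local hourly wage. The sketch below uses made-up inputs (a 2MB page, data at 0.10 per MB and a wage of 1.50 per hour, both in the same currency) chosen only to show how a figure like 8 minutes can arise; they are not the data behind Time's article or the figures quoted above, and the function name is hypothetical.

```python
def minutes_of_work(page_mb: float, price_per_mb: float, hourly_wage: float) -> float:
    """Minutes someone must work to pay for the data in one page view.

    price_per_mb and hourly_wage must be expressed in the same currency.
    """
    cost = page_mb * price_per_mb
    return cost / hourly_wage * 60


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    print(f"{minutes_of_work(2.0, 0.10, 1.50):.1f} minutes")
```

Because the result scales linearly with page size, halving a page's weight halves the minutes of work it costs the visitor.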

Market reach

Market reach is the second area where page weight has pivotal impact. Reach is affected in two ways. Firstly, heavy pages tend to work poorly on less capable devices. It is easy to think of smartphones as a homogeneous category but in reality there are huge differences in capability between them. Yesterday's smartphone is today's feature phone. Reducing page weight is practically guaranteed to improve the user experience across the board but will disproportionately enhance the experience for less capable devices.

Secondly, slower networks effectively act as market-limiters to paunchy sites. As YouTube's Chris Zacharias discovered, removing some of the weight from YouTube's video viewing page literally opened up entire new markets. The whole post is a really worthwhile read but two quotes really stand out:

“Large numbers of people who were previously unable to use YouTube before were suddenly able to.”

“By keeping your client side code small and lightweight, you can literally open your product up to new markets.”

An oft-cited trope in debates about page weight is that mobile networks are getting faster all the time, so the problem will ultimately go away. We've been hearing this argument for at least a decade now. Networks are certainly getting faster, but the demands being made on them are increasing apace. The same point was made with the advent of 3G networks years ago, and of 2.5G before that. Furthermore, coverage is never complete or uniform, and bandwidth tends to be worst at precisely the times you need it most, for example when you have broken down in the middle of nowhere or are trying to make a hotel reservation over airport WiFi.

Finally, heavy pages tend to hurt render time and even battery life. More complex pages take longer to fetch and render, and so keep the radio from powering down and the CPU from idling. Even almost imperceptible load and rendering delays build up a hesitation, a slight friction, that makes users question whether they really want to bother loading a given page, eventually leading to a loss of traffic.


Current status: morbid webesity

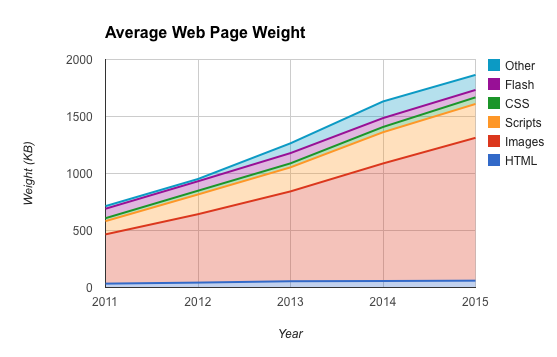

So what's the current status? Let's get the bad news out of the way first: the web has a weight problem, and it's getting worse. According to the invaluable HTTP Archive, at the time of writing the average page size is now just under 2MB, up from 1.7MB a year ago. The components of this weight gain are spread across the board, but images are the chief culprit. The following chart shows 5 years of girth gain.

The web's growing waistline

The data for this chart can be found here. Note that the HTTP Archive pie chart figures don't add up to the stated total.

About the only good news here is the slow demise of Flash, unfortunately more than compensated for by gains elsewhere. As always, images are the main cause of the gains over the last few years, closely followed by JavaScript. To some extent the relentless growth of images is understandable, since display resolution has been growing strongly in recent years. Unfortunately, traffic from mobile devices as a percentage of overall traffic has also grown strongly during this same period, leading to an unfortunate confluence of circumstances: page weight is growing strongly at about the least opportune time ever.

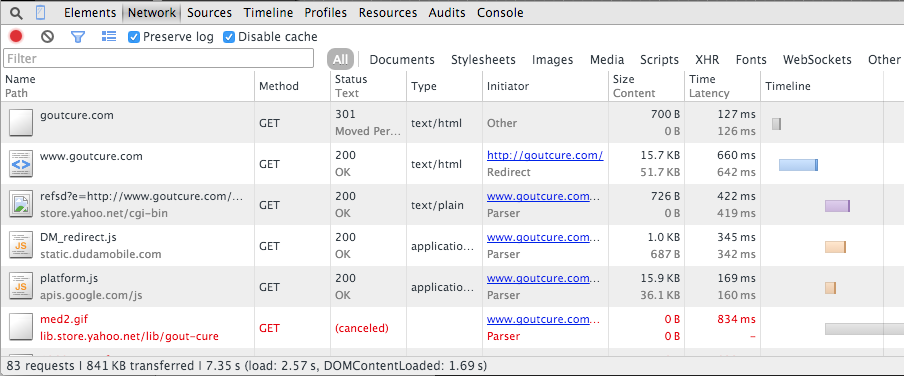

In order to measure and account for these issues we at dotMobi tend to focus on journey weight rather than page weight, since this more accurately reflects the cost (in both time and money) of the eventual page load.
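Assuming journey weight means the total of everything fetched between the user's first request and the final page, including any redirect hops on the way, it can be tallied as below. The `Hop` type, the example URLs, the byte counts and the round trips per hop are all hypothetical; the point is that each redirect adds both bytes and, more painfully on mobile, extra round trips before the real page even starts to load.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Hop:
    url: str
    response_bytes: int  # bytes transferred for this step of the journey
    round_trips: int     # assumed round trips (DNS, TCP, TLS, request) for this hop


def journey_weight(hops: list[Hop]) -> int:
    """Total bytes fetched across the whole journey, redirects included."""
    return sum(h.response_bytes for h in hops)


def journey_latency_seconds(hops: list[Hop], rtt_s: float) -> float:
    """Time spent on round trips alone, ignoring transfer time."""
    return sum(h.round_trips for h in hops) * rtt_s


if __name__ == "__main__":
    journey = [
        Hop("http://example.com/", 400, 3),          # redirect to a mobile site
        Hop("http://m.example.com/", 400, 3),        # redirect to HTTPS
        Hop("https://m.example.com/", 1_950_000, 4),  # final page and its assets
    ]
    print(journey_weight(journey), "bytes")
    print(f"{journey_latency_seconds(journey, 0.3):.1f}s spent on round trips")
```

In this made-up journey the two redirects add almost nothing to the byte count but account for more than half of the round trips, which is why measuring the journey rather than only the final page gives a truer picture.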

There are some signs that page weight awareness is growing, however.


Performance budget

There is growing awareness in the web avant-garde of the idea of performance as a feature and setting a performance budget for your site before you even start. Tim Kadlec and Brad Frost have espoused this idea quite a bit recently, to good effect. Getting agreement on a performance budget up front is a really good way of keeping page weight discipline through the project, especially later on when someone wants to add a fancy carousel to a lean page.
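A performance budget is easiest to keep when it is checked automatically, for example as a step in the build. The sketch below compares measured bytes per resource type against agreed limits; the function name, the categories, the limits and the measurements are hypothetical placeholders for whatever a team actually agrees on.

```python
from __future__ import annotations


def budget_overruns(measured: dict[str, int], budget: dict[str, int]) -> dict[str, int]:
    """Return how many bytes each resource type exceeds its budget by."""
    return {
        kind: measured.get(kind, 0) - limit
        for kind, limit in budget.items()
        if measured.get(kind, 0) > limit
    }


if __name__ == "__main__":
    # Hypothetical budget agreed at the start of a project (bytes per resource type).
    budget = {"html": 30_000, "css": 50_000, "js": 150_000, "images": 400_000}
    # Hypothetical measurements after someone adds an image carousel.
    measured = {"html": 28_000, "css": 45_000, "js": 210_000, "images": 650_000}
    for kind, excess in budget_overruns(measured, budget).items():
        print(f"{kind}: {excess:,} bytes over budget")
```

A build script could fail whenever the returned dictionary is non-empty, which turns the "fancy carousel" conversation into an explicit trade-off rather than a silent addition.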

Apparent performance vs. real performance

Much recent activity has focused on improving perceived performance. Essentially this means concentrating on the aspects of performance that users notice most, typically by optimising pages so that above-the-fold content is loaded and rendered quickly, since that is all the user can see at first. In practice this often means inlining the required styling in the HTML so that the browser has everything it needs to start rendering from the first response. Scott Jehl has a nice write-up on this here with a worked example.
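The inlining step itself is simple string manipulation. The sketch below places a block of critical CSS in a `<style>` element just before `</head>`, so that the first response carries what is needed to render above-the-fold content. Deciding which rules count as critical is the hard part and is assumed to have been done already; the function name and sample markup are hypothetical.

```python
import re


def inline_critical_css(html: str, critical_css: str) -> str:
    """Insert critical CSS into the document head as an inline <style> block.

    Assumes critical_css is trusted and does not itself contain "</style>".
    """
    match = re.search(r"</head\s*>", html, flags=re.IGNORECASE)
    if match is None:
        raise ValueError("document has no </head> to insert styles before")
    style_block = f"<style>{critical_css}</style>\n"
    return html[:match.start()] + style_block + html[match.start():]


if __name__ == "__main__":
    page = "<html><head><title>Demo</title></head><body><h1>Hello</h1></body></html>"
    print(inline_critical_css(page, "h1{font:bold 2em sans-serif;margin:0}"))
```

The full stylesheet would still be referenced and loaded as usual; only the small critical subset travels inline, which keeps the caching penalty mentioned below as small as possible.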

There is real value here - it absolutely makes sense to optimise pages around the content that is first seen by the user. That said, optimising perceived performance solves only one aspect of the weight problem (perceived load time) and not the cost and market reach aspects, since everything on the page must be downloaded at some point, regardless of how quickly the initial load is perceived as happening.

One slight downside to some of these techniques is that inlining resources means that the browser can’t cache them for the next visit, though there are techniques for working around this.

Understanding the disconnect

With such a compelling body of evidence making the case for decreasing page weight, it's worth asking why we seem to be on the wrong track: page weight is increasing inexorably and nobody seems to care. There are probably numerous reasons for this, but some of the more likely ones are the following.

- Creeping featuritis, the bane of the software world. On websites this manifests itself as extra weight. It's so easy to link in just one more JavaScript library or add another tracking service, but before you know it your site's performance is dragging.

- Lack of awareness. Developers may not be fully aware of the weight cost of what they build. Innocuous-looking JavaScript libraries that are incorporated with a single line of HTML often carry a several hundred KB weight penalty.

- Lack of real-world testing. Sites tend to be tested on fast devices in well-connected locations, so there is a big gap between those creating the experiences and those consuming them.

- A general growth in media-rich sites, exemplified by the recent surge in popularity of web fonts, video backgrounds and parallax-scrolling with heavy imagery.

Clearly not all sites are stricken with this problem. It’s remarkable to watch how the web’s top brands such as Google, Etsy and Amazon keep a laser focus on performance because they understand its importance. Google start rendering the results page for a search before they know what you are looking for in order to keep the page load time down.

One more recent piece of positive news is the rumour that Google may soon start to tag slow-loading sites in its search results. Slow loading is not the same thing as heavy, but they are strongly related. There is nothing like SEO impact to get people motivated to fix issues with their site so this could be a very positive development should it come to pass.

Watch out, heavy pages: Google is on to you!

Future

There are numerous ongoing initiatives which will help address the web's weight issues. Probably the two most important ongoing changes are the <picture> element and HTTP/2.0.

The <picture> element is the W3C's new image markup element, intended to solve the problem of client-side image selection. It lets the browser determine which of a set of predefined images to fetch and display without resorting to JavaScript-based solutions. Used correctly, this new element can yield very significant weight savings, since images make up about 70% of the weight of most web pages.
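As a rough illustration, the sketch below generates `<picture>` markup from a list of hypothetical image variants and models, in simplified form, the choice the browser makes: it uses the first `<source>` whose media query matches and falls back to the `<img>` otherwise. Only `min-width` media queries are modelled; real browsers also consider the `type`, `sizes` and pixel-density information, so this is a sketch rather than a description of any browser's algorithm. The function names and file names are hypothetical.

```python
from __future__ import annotations

from html import escape


def picture_markup(variants: list[tuple[int, str]], fallback_src: str, alt: str) -> str:
    """Build <picture> markup; variants are (min_width_px, url) pairs."""
    lines = ["<picture>"]
    # Widest breakpoint first, because the browser takes the first matching <source>.
    for min_width, url in sorted(variants, reverse=True):
        lines.append(f'  <source media="(min-width: {min_width}px)" srcset="{escape(url)}">')
    lines.append(f'  <img src="{escape(fallback_src)}" alt="{escape(alt)}">')
    lines.append("</picture>")
    return "\n".join(lines)


def chosen_image(variants: list[tuple[int, str]], fallback_src: str, viewport_px: int) -> str:
    """Simplified model of which image a browser would fetch for a viewport width."""
    for min_width, url in sorted(variants, reverse=True):
        if viewport_px >= min_width:
            return url
    return fallback_src


if __name__ == "__main__":
    variants = [(480, "photo-medium.jpg"), (1024, "photo-large.jpg")]
    print(picture_markup(variants, "photo-small.jpg", "A harbour at dusk"))
    for width in (320, 600, 1280):
        print(width, "->", chosen_image(variants, "photo-small.jpg", width))
```

The saving comes from what is never requested: a narrow phone fetches only the small fallback and never downloads the large variant at all.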

Adoption of the picture element will probably be quite slow since working with it requires significant changes in the publishing workflow. Widespread adoption will probably come in the form of updates to popular CMSes such as WordPress and Drupal; bespoke publishing systems may take a lot longer.

The other imminent sea change is the newly ratified HTTP/2.0 protocol, which was approved as a standard by the IETF in February 2015. HTTP/2.0 brings two major improvements related to page weight: more efficient use of connections and header compression. HTTP/2.0 has such profound effects on optimisation that many current performance improvement patterns become anti-patterns under HTTP/2.0.

For a given connection speed, HTTP/2.0 brings significant efficiency gains through its binary framing and its multiplexing of many requests over a single connection, making a given page load faster without any further changes by the publisher. Yes, the same page resources still need to be fetched, but the browser makes much better use of the available connectivity in doing so.
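A back-of-the-envelope model shows why multiplexing matters. Without pipelining, an HTTP/1.1 connection carries one request at a time and browsers typically open around six connections per host, so requests queue in waves; HTTP/2.0 can issue them all at once over one connection. The model below counts only request round trips and ignores bandwidth, slow start and server think time, so it overstates the gain for large resources; the function names, resource count and round-trip time are hypothetical.

```python
import math


def http1_request_waves(n_resources: int, connections_per_host: int = 6) -> int:
    """Sequential waves of requests when each connection handles one request at a time."""
    return math.ceil(n_resources / connections_per_host)


def http2_request_waves(n_resources: int) -> int:
    """With multiplexing, all requests can be issued together on one connection."""
    return 1 if n_resources > 0 else 0


if __name__ == "__main__":
    resources, rtt_s = 90, 0.3  # hypothetical page on a high-latency mobile link
    for name, waves in (("HTTP/1.1", http1_request_waves(resources)),
                        ("HTTP/2.0", http2_request_waves(resources))):
        print(f"{name}: {waves} waves, about {waves * rtt_s:.1f}s of round trips")
```

The same arithmetic explains why workarounds such as domain sharding, which spread resources across hostnames to get more parallel connections, stop paying off under HTTP/2.0.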

Secondly, HTTP/2.0 compresses headers as well as payloads. Earlier versions of the protocol could compress response bodies but always sent headers as uncompressed text; HTTP/2.0 builds header compression (the HPACK scheme) into the protocol itself. Header compression may sound insignificant but it really isn't: for many page resources, such as small icons, the headers for a given request are larger than the payload.
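To get a feel for why header compression matters, the sketch below measures a plausible set of request headers and compares sending them in full on every request with a toy scheme in which each header repeated from an earlier request costs a single byte. That toy scheme only gestures at the indexing idea behind HPACK; it is not HPACK, which also uses static and dynamic tables and Huffman coding. The header values, the 300-byte icon and the function names are hypothetical.

```python
from __future__ import annotations

# Hypothetical request headers for a small icon on a mobile browser.
ICON_REQUEST_HEADERS = {
    "Host": "www.example.com",
    "User-Agent": ("Mozilla/5.0 (Linux; Android 4.4.2; Nexus 5 Build/KOT49H) "
                   "AppleWebKit/537.36 (KHTML, like Gecko) "
                   "Chrome/40.0.2214.89 Mobile Safari/537.36"),
    "Accept": "image/webp,*/*;q=0.8",
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "en-GB,en;q=0.8",
    "Referer": "https://www.example.com/",
    "Cookie": "session=" + "a" * 120,  # hypothetical session cookie
}
ICON_PAYLOAD_BYTES = 300  # hypothetical small icon


def header_bytes(headers: dict[str, str]) -> int:
    """Bytes for headers sent as HTTP/1.1-style 'Name: value' text lines."""
    return sum(len(f"{name}: {value}\r\n".encode("ascii")) for name, value in headers.items())


def total_header_bytes(headers: dict[str, str], n_requests: int, index_repeats: bool) -> int:
    """Header bytes for n identical requests, with or without the toy indexing scheme."""
    if n_requests == 0:
        return 0
    full = header_bytes(headers)
    if not index_repeats:
        return full * n_requests
    # Toy model: the first request sends everything, later ones one byte per repeated header.
    return full + (n_requests - 1) * len(headers)


if __name__ == "__main__":
    print(f"headers {header_bytes(ICON_REQUEST_HEADERS)} bytes "
          f"vs icon payload {ICON_PAYLOAD_BYTES} bytes")
    for indexed in (False, True):
        total = total_header_bytes(ICON_REQUEST_HEADERS, 50, indexed)
        print(f"50 requests, index_repeats={indexed}: {total:,} header bytes")
```

Real savings depend on the encoder and on how much headers vary between requests, but the shape of the result holds: headers that are identical from request to request are mostly redundant.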

Balancing these gains is one significant downside. Browsers only support HTTP/2.0 over encrypted connections, so adopting it in practice means migrating to HTTPS, and because the local web caches operated by ISPs, operators and other organisations cannot cache encrypted traffic, they will be disintermediated. This means that frequently requested resources will have to be fetched every time from their original (or CDN) sources rather than from a local cache, increasing the pressure to keep page sizes down.

Overall, these changes bring very real benefits across the board and should be celebrated. That said, these improvements help people only as fast as support for them spreads to both servers and browsers, which unfortunately leaves behind those most in need: people with slower, older devices that are stuck on the browser version they shipped with. The same caveat applies to the <picture> element, which won't ever work on the very devices that need it most.

One final landscape evolution is the growth in rich JavaScript libraries and the shadow DOM. While these new tools bring great utility they tend to come with a weight penalty, often a silent one. JavaScript libraries tend to be relatively easy to add to a site, but often carry a far higher weight penalty than their one-line copy-and-paste installation would suggest.

Etsy's @lara_hogan: adding 160KB to page increased bounce 12%. Do you really want to add sharing buttons? addthis.com adds ~1MB.

(ronan cremin, @xbs, April 10, 2015)

Social sharing toolbars are just one example: the 690 bytes of HTML required to add sharethis.com eventually adds 89KB of weight to a page, and addthis.com's 128 bytes of include code adds a shocking 980KB in some cases. These libraries tend to be added to sites gradually over time, but their impact often goes unnoticed until later, if ever, since those developing the sites tend to use faster, better-connected machines than their customers.
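The ratio between the size of an include snippet and the weight it eventually pulls in is a useful number to track. The sketch below computes it for the two sharing toolbars quoted above, treating 1KB as 1024 bytes (an assumption, since the figures do not state a convention), and expresses the added weight as a share of a roughly 2MB average page. The function and variable names are hypothetical.

```python
def amplification(snippet_bytes: int, added_bytes: int) -> float:
    """How many bytes are eventually downloaded per byte of include code."""
    return added_bytes / snippet_bytes


KB = 1024  # assumed kilobyte convention
AVERAGE_PAGE_BYTES = 2_000_000  # "just under 2MB", rounded for illustration

# (include snippet bytes, bytes eventually added), from the figures quoted above
toolbars = {
    "sharethis.com": (690, 89 * KB),
    "addthis.com": (128, 980 * KB),
}

if __name__ == "__main__":
    for name, (snippet, added) in toolbars.items():
        share = added / AVERAGE_PAGE_BYTES
        print(f"{name}: {amplification(snippet, added):,.0f}x amplification, "
              f"{share:.0%} of an average page")
```

Amplification factors in the hundreds or thousands are the reason a one-line copy-and-paste installation deserves the same scrutiny as any other change to a performance budget.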

Summary

The web has a weight problem. There is ample data showing that users really do care about this, yet page weight is increasing inexorably, in apparent disregard of user wishes. Lightening your site improves the experience for everyone, regardless of device and connectivity, every time they visit, for as long as the site is around. The argument in favour of taking pains to reduce weight is overwhelming.

In an app-focused era the web is under increased pressure; it makes sense to give the web our best shot. Slow and bloated sites are not the way to compete.

In subsequent articles in this series we'll continue the theme and tell you how to measure and reduce weight.

Article series

- 1. Understanding web page weight

- 2. Measuring page weight

- 3. Reducing page weight
